Scaling a governance model

Credo AI

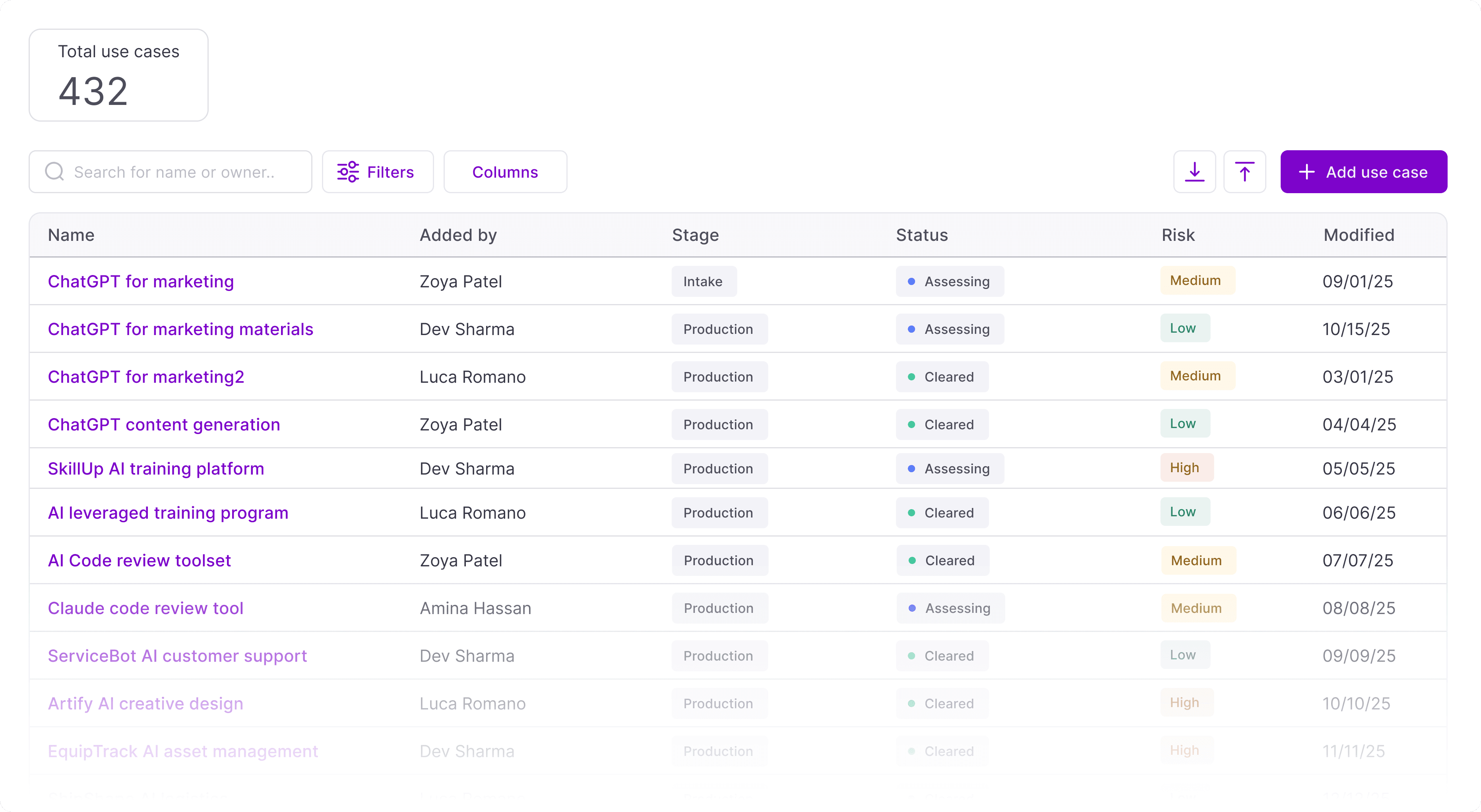

As AI adoption scaled, teams registered the same tools repeatedly under slightly different use cases. Each instance required its own review, ownership, and maintenance, which fragmented context and multiplied governance effort without improving oversight. What looked like growth was actually duplication.

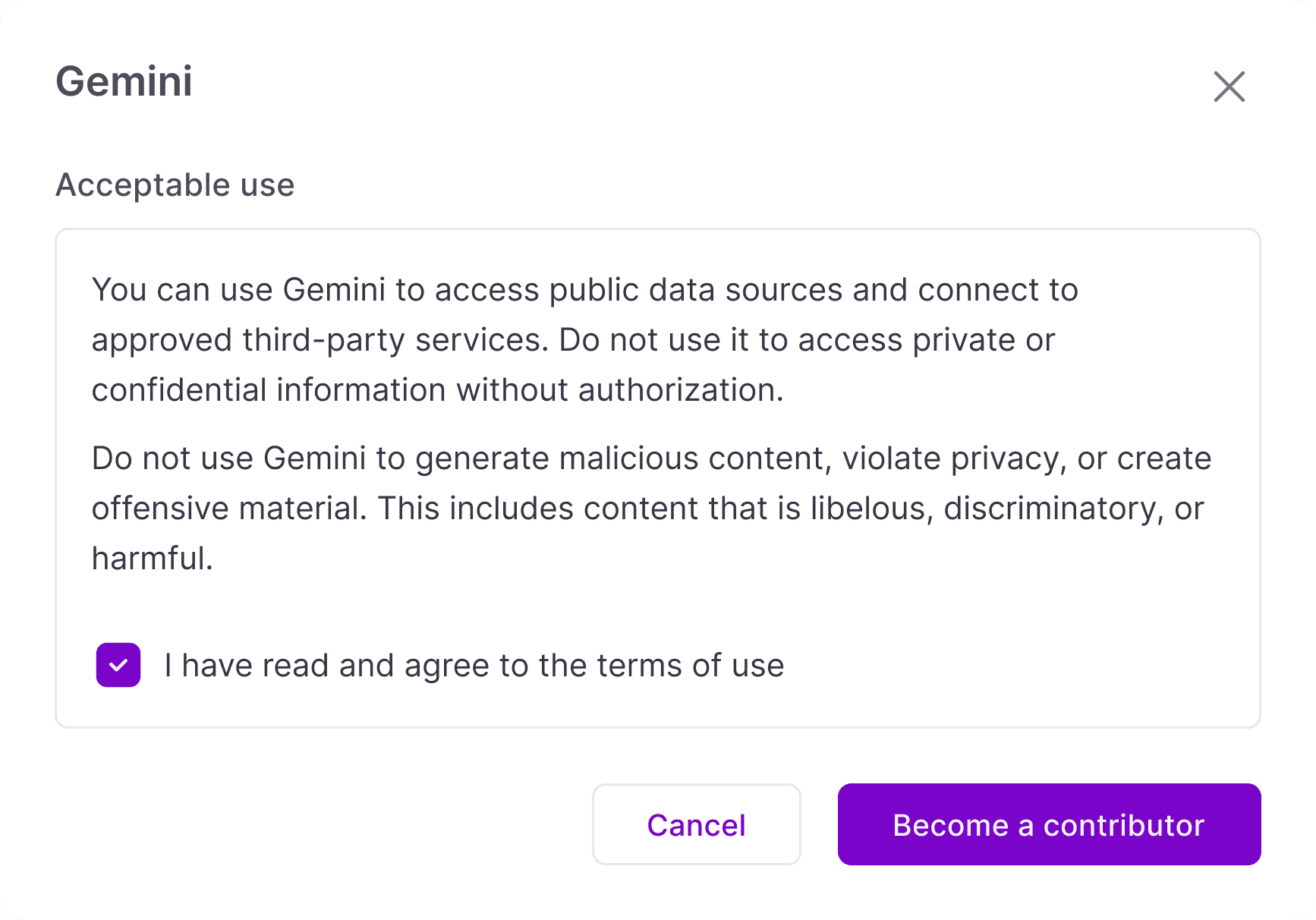

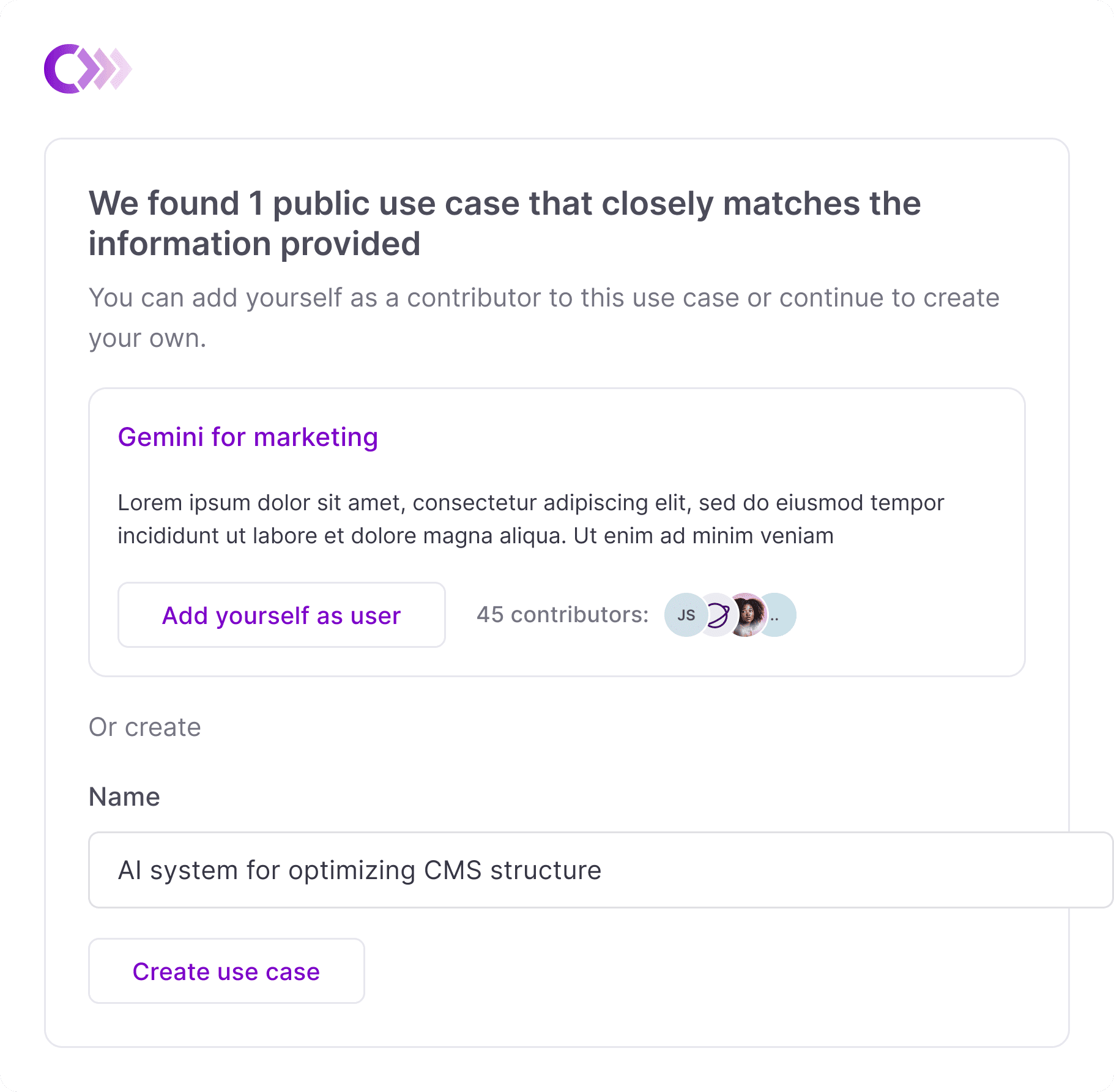

The breakthrough was elevating "acceptable use" to a first-class governance artifact. This required designing eligibility criteria, workflows, and system rules that let users join existing governed systems rather than create duplicates. Governance shifted from approving individual requests to defining acceptable participation, which removed redundancy while preserving accountability.
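
To make "eligibility criteria" more concrete, here is a minimal Python sketch of how an acceptable-use policy might decide whether a request can join an existing governed system. The criteria (covered tools, a maximum risk tier, prohibited data categories) and every class and function name are hypothetical assumptions for illustration, not Credo AI's actual rules or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UsageRequest:
    """A user's proposed use of an AI tool (hypothetical fields)."""
    tool: str
    risk_tier: int                   # 1 = low risk; higher numbers are riskier
    data_categories: frozenset[str]  # e.g. {"public", "pii"}


@dataclass(frozen=True)
class AcceptableUsePolicy:
    """Hypothetical eligibility criteria for joining an existing governed system."""
    system_name: str
    allowed_tools: frozenset[str]
    max_risk_tier: int
    prohibited_data: frozenset[str] = field(default_factory=frozenset)


def unmet_criteria(request: UsageRequest, policy: AcceptableUsePolicy) -> list[str]:
    """Return the criteria the request fails; an empty list means it may join."""
    failures = []
    if request.tool not in policy.allowed_tools:
        failures.append(f"tool '{request.tool}' is not covered")
    if request.risk_tier > policy.max_risk_tier:
        failures.append(f"risk tier {request.risk_tier} exceeds {policy.max_risk_tier}")
    blocked = request.data_categories & policy.prohibited_data
    if blocked:
        failures.append(f"prohibited data: {', '.join(sorted(blocked))}")
    return failures


policy = AcceptableUsePolicy(
    system_name="General-purpose assistants",
    allowed_tools=frozenset({"chat-assistant", "code-assistant"}),
    max_risk_tier=1,
    prohibited_data=frozenset({"pii"}),
)
print(unmet_criteria(UsageRequest("chat-assistant", 1, frozenset({"public"})), policy))  # []
print(unmet_criteria(UsageRequest("chat-assistant", 3, frozenset({"pii"})), policy))
# ['risk tier 3 exceeds 1', 'prohibited data: pii']
```

Returning the list of unmet criteria, rather than a bare yes/no, gives the registration flow something specific to show users who fall outside acceptable use.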

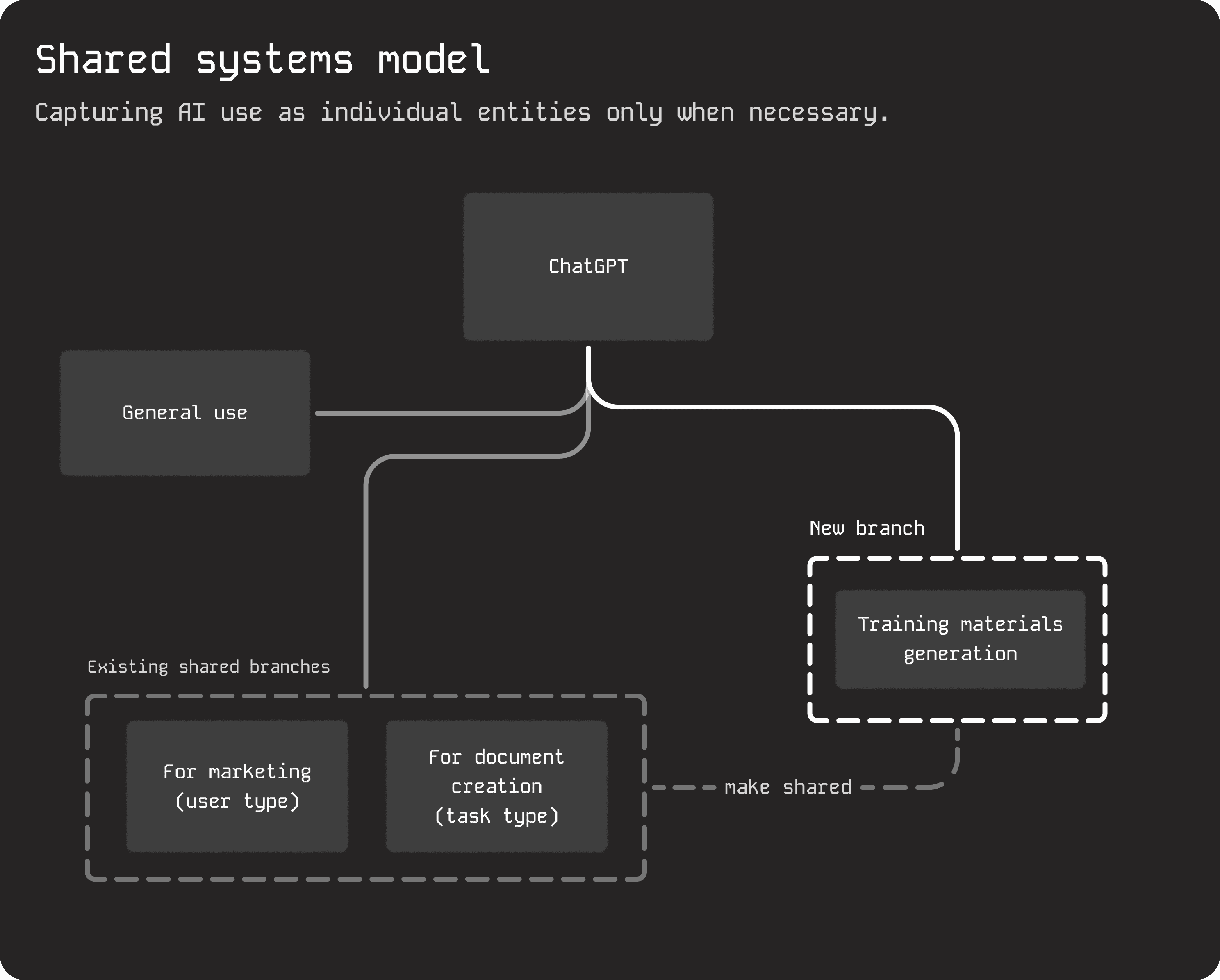

Acceptable use consolidated broad, low-risk AI usage into a single governed system, eliminating duplication and establishing a baseline for oversight. That system can also branch: a shared system extends into usage-specific sub-entities, each with its own policies and constraints. Common patterns stay under centralized governance while specialized usage evolves independently, and private use cases are introduced only when necessary.
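
The branching model could be represented as a tree in which each sub-entity inherits its parent's policies and layers its own on top. This sketch assumes policies are simple string labels and that children can only add constraints, never remove inherited ones; both are illustrative choices, and `GovernedSystem` and its methods are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GovernedSystem:
    """A governed system that can branch into usage-specific sub-entities (hypothetical)."""
    name: str
    policies: set[str] = field(default_factory=set)
    # Links are excluded from repr/eq so parent <-> child references never recurse.
    parent: GovernedSystem | None = field(default=None, repr=False, compare=False)
    children: list[GovernedSystem] = field(default_factory=list, repr=False, compare=False)

    def branch(self, name: str, extra_policies: set[str]) -> GovernedSystem:
        """Create a sub-entity that inherits this system's policies and adds its own."""
        child = GovernedSystem(name, set(extra_policies), parent=self)
        self.children.append(child)
        return child

    def effective_policies(self) -> set[str]:
        """Union of this system's own policies and those of every ancestor."""
        inherited = self.parent.effective_policies() if self.parent is not None else set()
        return inherited | self.policies


shared = GovernedSystem("Low-risk assistants", {"no-pii", "human-review-of-outputs"})
marketing = shared.branch("Marketing copy", {"brand-guidelines"})
print(sorted(marketing.effective_policies()))
# ['brand-guidelines', 'human-review-of-outputs', 'no-pii']
```

Restricting children to additive policies is one way to keep the shared baseline authoritative; a real system might also allow explicit, reviewed overrides.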

Discovery builds awareness, but prevention happens at registration. The AI-assisted flow evaluates user intent and surfaces existing systems with overlapping purpose, prompting an explicit choice: join an existing system or explain why a new use case is needed. This turns duplication from an accidental outcome into a deliberate decision, preventing fragmentation while still allowing warranted exceptions.
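
The join-or-justify step might look something like the following. As an assumption, simple word-overlap (Jaccard) similarity stands in for the AI-assisted intent evaluation, and the 0.3 threshold, `find_overlaps`, and `register` are hypothetical names chosen for illustration.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ExistingSystem:
    name: str
    purpose: str


def _terms(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for AI-based intent analysis."""
    return set(re.findall(r"[a-z]+", text.lower()))


def find_overlaps(intent: str, systems: list[ExistingSystem],
                  threshold: float = 0.3) -> list[tuple[ExistingSystem, float]]:
    """Return systems whose purpose overlaps the intent (Jaccard >= threshold), best first."""
    query = _terms(intent)
    matches = []
    for system in systems:
        purpose = _terms(system.purpose)
        union = query | purpose
        score = len(query & purpose) / len(union) if union else 0.0
        if score >= threshold:
            matches.append((system, score))
    return sorted(matches, key=lambda m: m[1], reverse=True)


def register(intent: str, systems: list[ExistingSystem],
             join: str | None = None, justification: str = "") -> str:
    """Require an explicit choice when overlaps exist: join one, or justify a new use case."""
    overlaps = find_overlaps(intent, systems)
    if not overlaps:
        return "created new use case"
    if join is not None:
        if join not in {s.name for s, _ in overlaps}:
            raise ValueError(f"'{join}' is not among the suggested systems")
        return f"joined {join}"
    if not justification.strip():
        raise ValueError("overlapping systems exist; join one or justify a new use case")
    return "created new use case (justified)"


systems = [
    ExistingSystem("Meeting summaries", "summarize internal meeting notes"),
    ExistingSystem("Code review", "review pull requests for bugs"),
]
print(register("summarize meeting notes for my team", systems, join="Meeting summaries"))
# joined Meeting summaries
```

A production flow would replace `_terms` with a semantic similarity model; the control flow, where no new use case is created silently while overlaps exist, is the point of the sketch.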

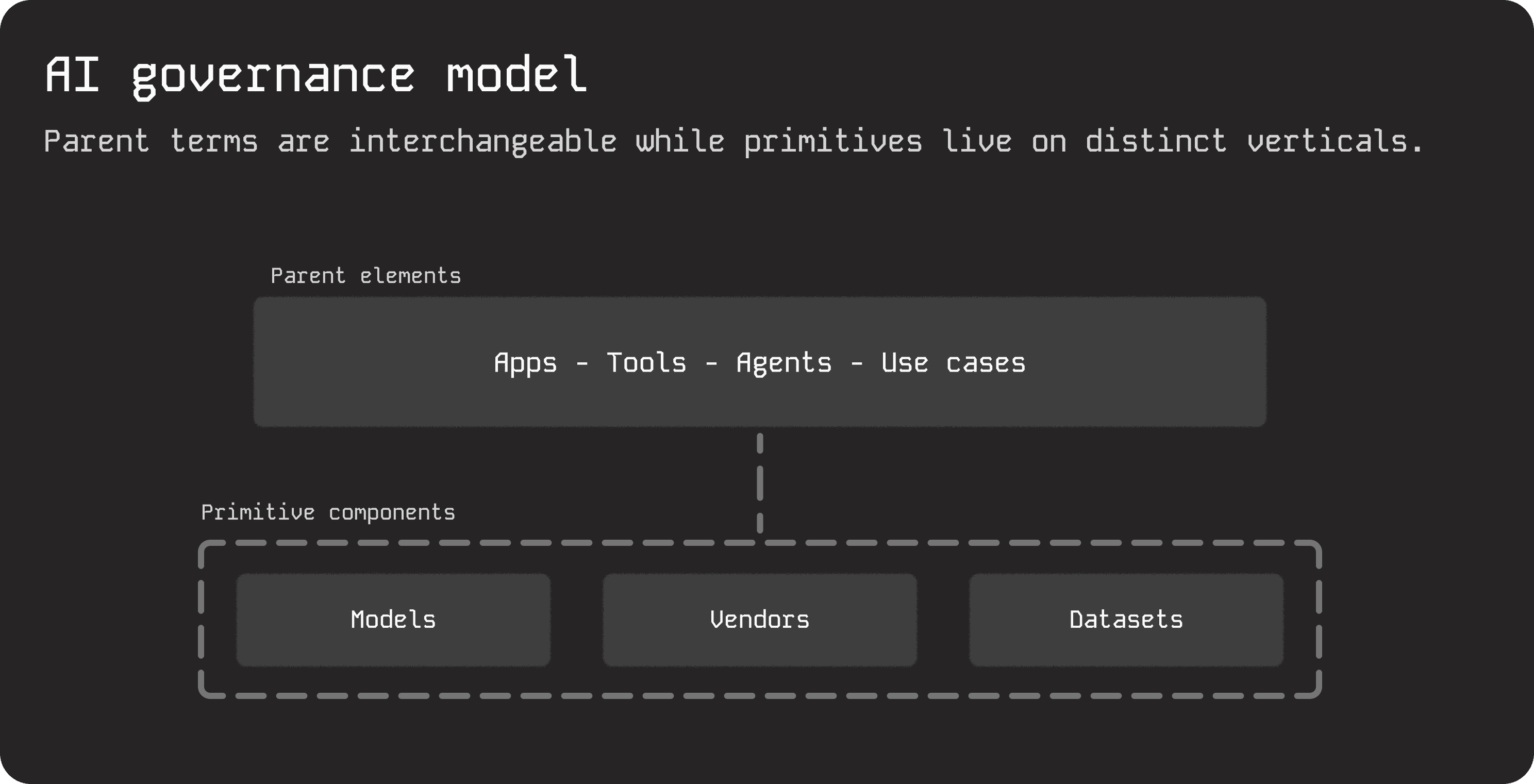

Between 2022 and 2025, enterprise AI evolved rapidly, from isolated use cases to external tools to agent-based systems, yet the underlying models, vendors, and datasets remained constant. Debates over terminology stalled progress. By proposing a technical model with customizable parent terms and global reclassification controls, we gained the flexibility to move forward even as the language kept changing.
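
One way to decouple records from evolving vocabulary is to key parent terms by stable IDs and treat their labels as editable display text. In this hypothetical sketch, `rename` changes what a term is called without touching any record, and `reclassify` moves every record from one parent term to another in a single operation; the `Taxonomy` class and its IDs are assumptions, not the model Credo AI shipped.

```python
from dataclasses import dataclass, field


@dataclass
class Taxonomy:
    """Hypothetical parent terms keyed by stable IDs with customizable labels."""
    labels: dict[str, str] = field(default_factory=dict)       # category id -> display label
    assignments: dict[str, str] = field(default_factory=dict)  # record id -> category id

    def rename(self, category_id: str, label: str) -> None:
        """Change what a parent term is called without touching any record."""
        self.labels[category_id] = label

    def reclassify(self, source: str, target: str) -> int:
        """Move every record under `source` to `target`; return how many moved."""
        if target not in self.labels:
            raise KeyError(f"unknown category: {target}")
        moved = [record for record, cat in self.assignments.items() if cat == source]
        for record in moved:
            self.assignments[record] = target
        return len(moved)

    def label_of(self, record_id: str) -> str:
        """Current display label of the parent term a record belongs to."""
        return self.labels[self.assignments[record_id]]


tax = Taxonomy(
    labels={"cat-1": "Use case", "cat-2": "AI system"},
    assignments={"rec-a": "cat-1", "rec-b": "cat-1"},
)
tax.rename("cat-1", "AI application")
print(tax.label_of("rec-a"))            # AI application
print(tax.reclassify("cat-1", "cat-2"))  # 2
print(tax.label_of("rec-b"))            # AI system
```

Because each record points at a category ID rather than a label, anything attached to the record (such as its models, vendors, or datasets) would survive a rename or reclassification unchanged.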

In the short term, AI discovery, duplication prevention, and out-of-the-box policies streamlined use case creation and reduced registry clutter. In the long term, a shared model that branches as usage becomes more specific supports growing complexity without fragmenting governance.

This work reframed AI governance from reviewing individual use cases to defining durable boundaries for participation. By operationalizing acceptable use within discovery and registration flows, it shows how design can move governance upstream and make it concrete and scalable, shifting the focus from managing instances to shaping infrastructure.