Translating intent into data

Credo AI

As AI usage scales, manual registration forms create bottlenecks. Users struggle with terminology, submissions lack consistency, and governance teams spend their time interpreting intent rather than evaluating risk.

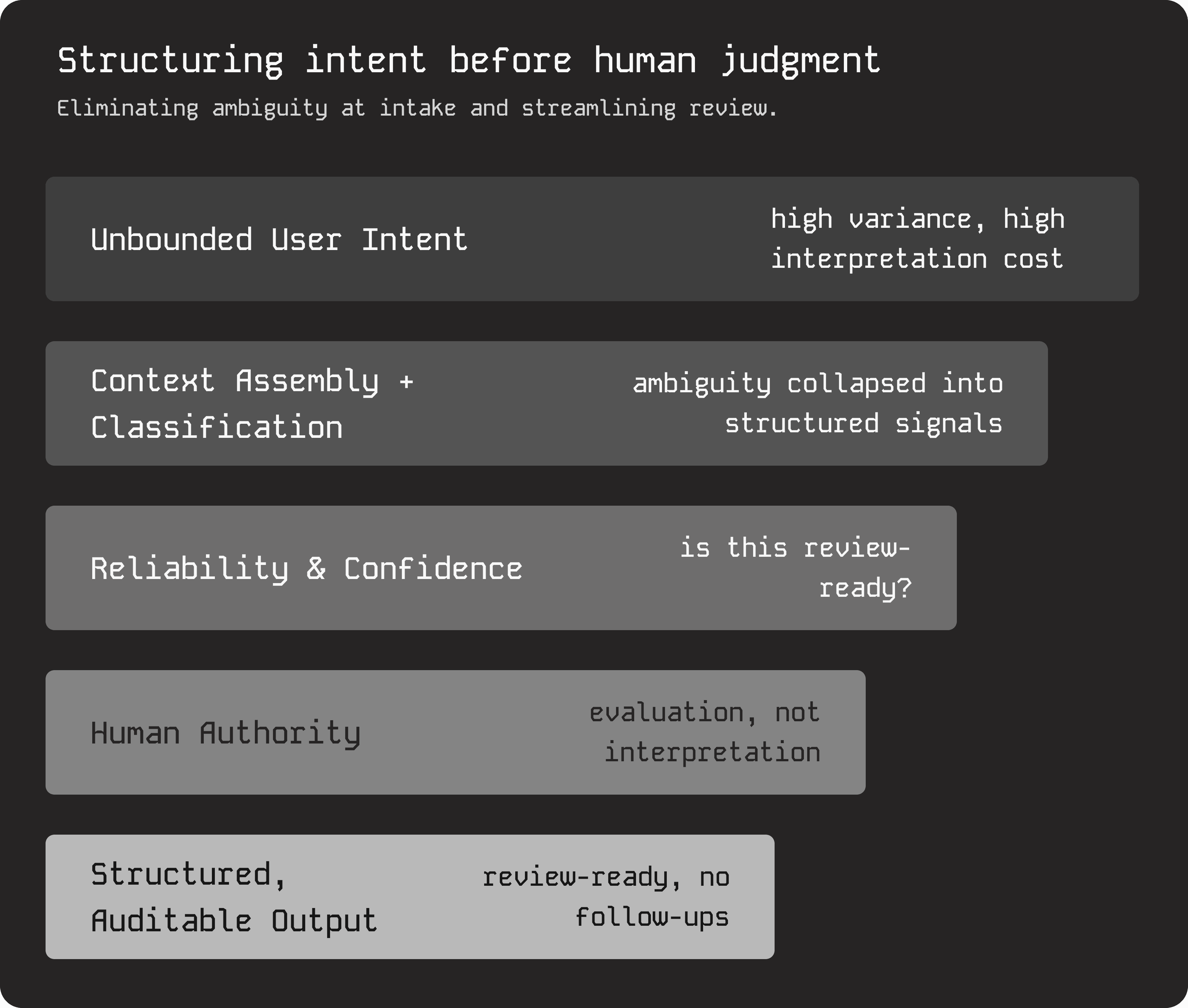

I designed AI Assist to remove this bottleneck by structuring intent. It translates natural language into explicit signals, surfaces assumptions, and highlights uncertainty, but it never automates approval decisions.

The initial prompt posed a design trade-off: ask users for comprehensive details upfront, or let them start small and delegate more to the AI as they build trust.

I chose to encourage detailed input from the start, while still allowing users to begin with minimal information if they prefer.

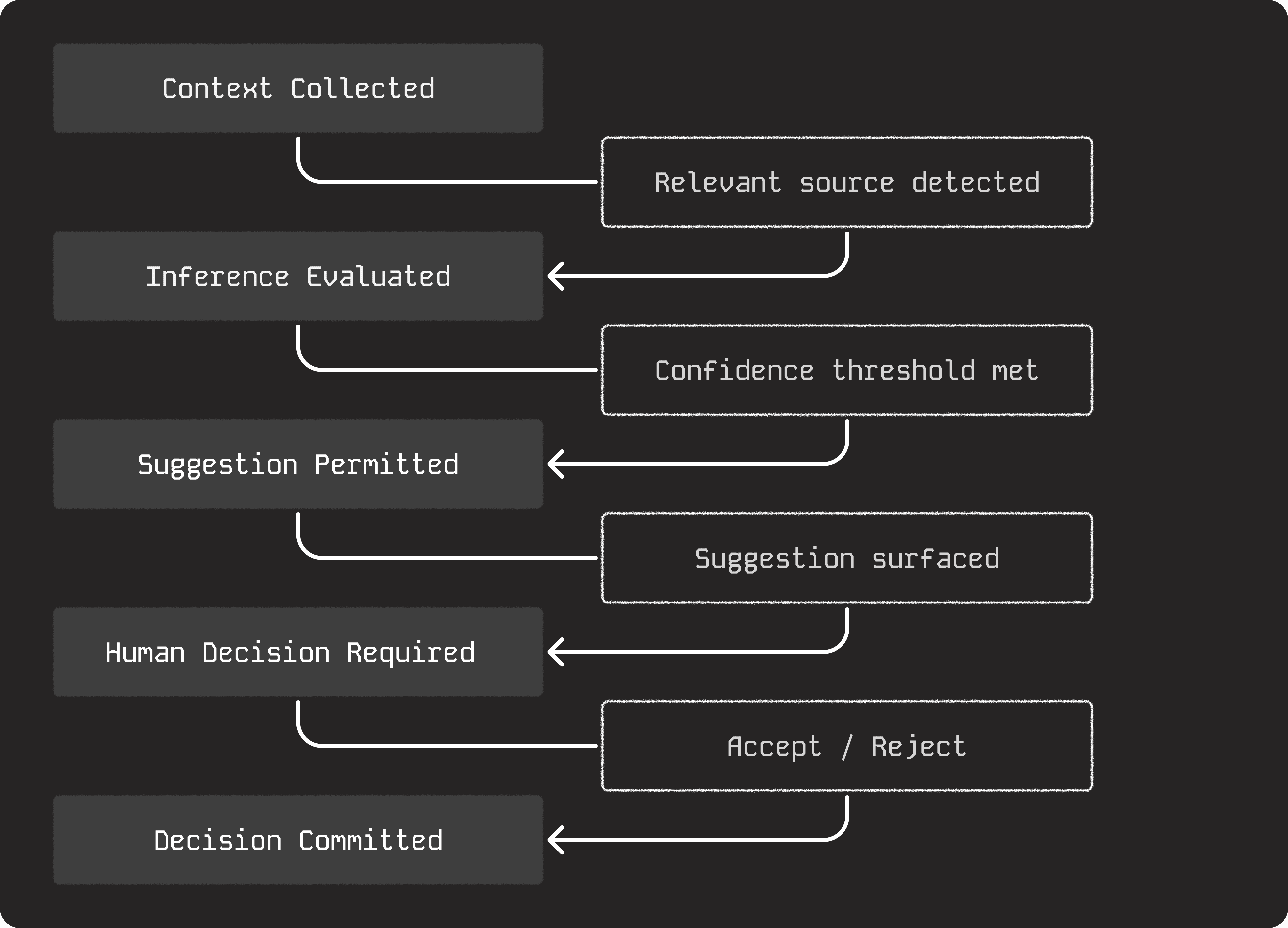

Before taking any action, Assist briefly pauses to assess whether the context is complete and may ask up to two clarifying questions. This builds trust and improves downstream output quality at the cost of a slight delay.

Before Assist begins more complex actions, users confirm its initial selections and add key details. This focuses their attention on critical moments and surfaces friction wherever their language diverges from governance terminology.

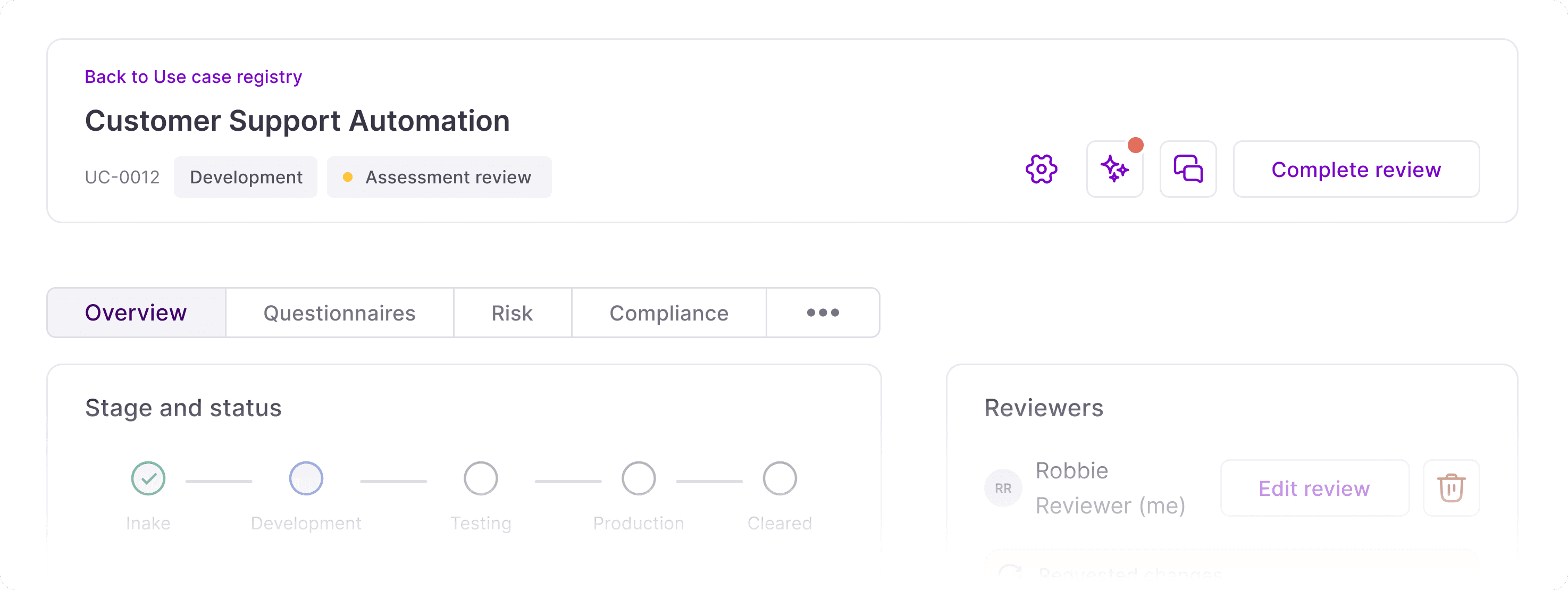

Once a use case is registered, AI Assist moves to a dedicated workspace accessed from the toolbar. This creates a persistent surface for AI activity and separates AI collaboration from human discussion, so users can move intentionally between working with the system (Assist) and coordinating with teammates (Comments) without the two modes overlapping.

Within this workspace, system activity and context remain visible throughout review. When Assist can't proceed, it guides users to the lowest-friction next step instead of stalling or failing ambiguously.

What users see is the final step of a longer decision process. Before showing any suggestion, Assist evaluates whether enough context exists to make it meaningful and reviewable.

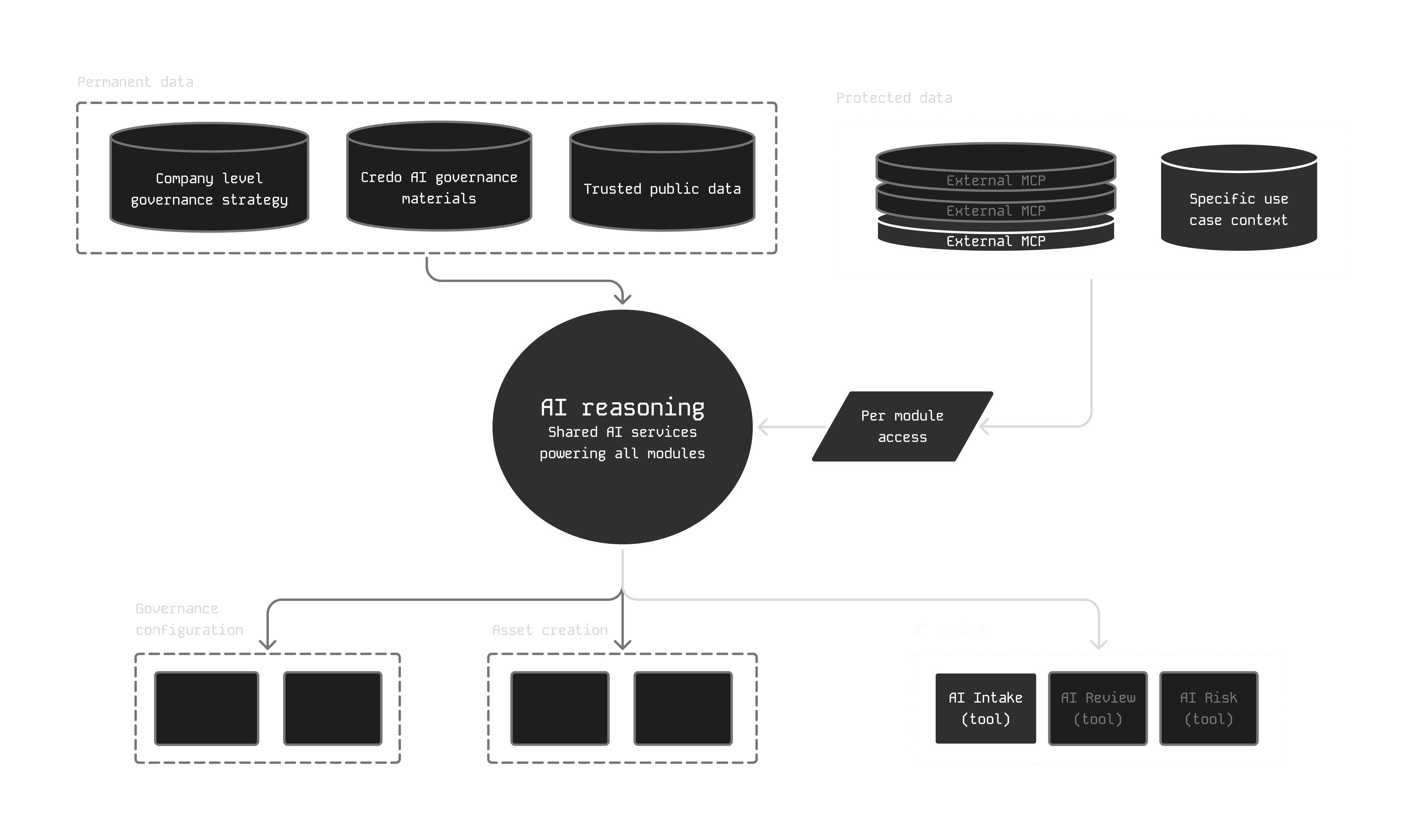

AI Assist is designed for governance from the outset: context is controlled, outputs are constrained, sensitive data is protected, and every action is recorded for traceability and audit. This foundation becomes critical as the system scales toward more sensitive workflows where ambiguity or silent failure would be unacceptable.

By collapsing use-case submission into a single structured interaction, AI Assist replaced weeks of back-and-forth with review-ready submissions completed in one session. Clearer inputs shifted governance teams from interpreting intent to evaluating risk, cutting average review time roughly in half without automating judgment.

As AI adoption grows, systems like AI Assist matter not because they replace human judgment but because they make it scalable through clearer intent, better inputs, and faster, more confident review.